OCI Kubernetes Engine (OKE) Terraform Module

Introduction

This module automates the provisioning of an OKE cluster.

The documentation here is for 5.x only. The documentation for earlier versions can be found on the GitHub repo on the relevant branch.

News

December 4, 2024: Announcing v5.2.2

- feat: add support to reference module nsgs in the nsg rules

November 18, 2024: Announcing v5.2.1

- fix: operator custom cloud-init error by @mcouto-sossego in #950

- feat: added rules to allow UDP to be used for node port ranges by @robo-cap in #961

November 7, 2024: Announcing v5.2.0

- Add support for stateless rules

- Fix KMS policy - cluster dependency

- Add cluster addon support

- Allow cloud-init update for nodepools

- Add several improvements and fixes

- Cilium extension upgrade to 1.16

- Fix pod nsg bug

July 9, 2024: Announcing v5.1.8

- allow user to add additional rules to the workers NSG

- docs: updated main page, mdbook component versions

- Add support to ignore_initial_pool_size attribute on nodepools

May 9, 2023: Announcing release v4.5.9

- Make the default freeform_tags empty

- Use lower bound version specification for oci provider

Related Documentation

- VCN Module Documentation

- Bastion Module Documentation

- Operator Module Documentation

- DRG Module Documentation

Changelog

View the CHANGELOG.

Security

Please consult the security guide for our responsible security vulnerability disclosure process.

License

Copyright (c) 2019-2023 Oracle and/or its affiliates.

Released under the Universal Permissive License v1.0 as shown at https://oss.oracle.com/licenses/upl/.

Getting started

This module automates the provisioning of an OKE cluster.

The documentation here is for 5.x only. The documentation for earlier versions can be found on the GitHub repo.

Usage

Clone the repo

Clone the git repo:

git clone https://github.com/oracle-terraform-modules/terraform-oci-oke.git tfoke

cd tfoke

Create

- Create 2 OCI providers and add them to providers.tf:

provider "oci" {
  fingerprint      = var.api_fingerprint
  private_key_path = var.api_private_key_path
  region           = var.region
  tenancy_ocid     = var.tenancy_id
  user_ocid        = var.user_id
}

provider "oci" {
  fingerprint      = var.api_fingerprint
  private_key_path = var.api_private_key_path
  region           = var.home_region
  tenancy_ocid     = var.tenancy_id
  user_ocid        = var.user_id
  alias            = "home"
}

- Initialize a working directory containing Terraform configuration files, and optionally upgrade module dependencies:

terraform init --upgrade

- Create a terraform.tfvars and provide the necessary parameters:

# Identity and access parameters

api_fingerprint = "00:ab:12:34:56:cd:78:90:12:34:e5:fa:67:89:0b:1c"

api_private_key_path = "~/.oci/oci_rsa.pem"

home_region = "us-ashburn-1"

region = "ap-sydney-1"

tenancy_id = "ocid1.tenancy.oc1.."

user_id = "ocid1.user.oc1.."

# general oci parameters

compartment_id = "ocid1.compartment.oc1.."

timezone = "Australia/Sydney"

# ssh keys

ssh_private_key_path = "~/.ssh/id_ed25519"

ssh_public_key_path = "~/.ssh/id_ed25519.pub"

# networking

create_vcn = true

assign_dns = true

lockdown_default_seclist = true

vcn_cidrs = ["10.0.0.0/16"]

vcn_dns_label = "oke"

vcn_name = "oke"

# Subnets

subnets = {

bastion = { newbits = 13, netnum = 0, dns_label = "bastion", create="always" }

operator = { newbits = 13, netnum = 1, dns_label = "operator", create="always" }

cp = { newbits = 13, netnum = 2, dns_label = "cp", create="always" }

int_lb = { newbits = 11, netnum = 16, dns_label = "ilb", create="always" }

pub_lb = { newbits = 11, netnum = 17, dns_label = "plb", create="always" }

workers = { newbits = 2, netnum = 1, dns_label = "workers", create="always" }

pods = { newbits = 2, netnum = 2, dns_label = "pods", create="always" }

}

# bastion

create_bastion = true

bastion_allowed_cidrs = ["0.0.0.0/0"]

bastion_user = "opc"

# operator

create_operator = true

operator_install_k9s = true

# iam

create_iam_operator_policy = "always"

create_iam_resources = true

create_iam_tag_namespace = false // true/*false

create_iam_defined_tags = false // true/*false

tag_namespace = "oke"

use_defined_tags = false // true/*false

# cluster

create_cluster = true

cluster_name = "oke"

cni_type = "flannel"

kubernetes_version = "v1.29.1"

pods_cidr = "10.244.0.0/16"

services_cidr = "10.96.0.0/16"

# Worker pool defaults

worker_pool_size = 0

worker_pool_mode = "node-pool"

# Worker defaults

await_node_readiness = "none"

worker_pools = {
  np1 = {
    shape              = "VM.Standard.E4.Flex",
    ocpus              = 2,
    memory             = 32,
    size               = 1,
    boot_volume_size   = 50,
    kubernetes_version = "v1.29.1"
  }
  np2 = {
    shape              = "VM.Standard.E4.Flex",
    ocpus              = 2,
    memory             = 32,
    size               = 3,
    boot_volume_size   = 150,
    kubernetes_version = "v1.29.1"
  }
}

# Security

allow_node_port_access = false

allow_worker_internet_access = true

allow_worker_ssh_access = true

control_plane_allowed_cidrs = ["0.0.0.0/0"]

control_plane_is_public = false

load_balancers = "both"

preferred_load_balancer = "public"

- Run the plan and apply commands to create OKE cluster and other components:

terraform plan

terraform apply

You can create a Kubernetes cluster with the latest version of Kubernetes available in OKE using this terraform script.

Connect


kubectl is installed on the operator host by default and the kubeconfig file is set in the default location (~/.kube/config) so you don't need to set the KUBECONFIG environment variable every time you log in to the operator host.

The instance principal of the operator must be granted MANAGE on target cluster for configuration of an admin user context.

An alias "k" will be created for kubectl on the operator host.
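The operator requirements above correspond to settings that already appear in the terraform.tfvars example. The fragment below is a minimal sketch that only repeats those values; it assumes, without confirmation from this page, that create_iam_operator_policy is the setting that grants the operator's instance principal its access to the cluster.

```hcl
# Sketch only: values copied from the terraform.tfvars example in "Getting started".
# Assumption: the operator policy is what grants the operator's instance
# principal MANAGE on the cluster.
create_operator            = true
create_iam_resources       = true
create_iam_operator_policy = "always" # never/auto/always
```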

If you would like to use kubectl locally, first install and configure OCI CLI locally. Then, install kubectl and set the KUBECONFIG to the config file path.

export KUBECONFIG=path/to/kubeconfig

To obtain the kubeconfig file, retrieve the cluster credentials with Terraform and store them in your preferred storage backend (e.g. a file, a vault or a bucket):

# OKE cluster creation.
module "oke_my_cluster" {
  #...
}

# Obtain cluster Kubeconfig.
data "oci_containerengine_cluster_kube_config" "kube_config" {
  cluster_id = module.oke_my_cluster.cluster_id
}

# Store kubeconfig in vault.
resource "vault_generic_secret" "kube_config" {
  path = "my/cluster/path/kubeconfig"
  data_json = jsonencode({
    "data" : data.oci_containerengine_cluster_kube_config.kube_config.content
  })
}

# Store kubeconfig in file.
resource "local_file" "kube_config" {
  content         = data.oci_containerengine_cluster_kube_config.kube_config.content
  filename        = "/tmp/kubeconfig"
  file_permission = "0600"
}

Update

NOTE: TODO Add content

Destroy

Run the below command to destroy the infrastructure created by Terraform:

terraform destroy

You can also perform a targeted destroy, e.g.:

terraform destroy --target=module.workers

Prerequisites

This section will guide you through the prerequisites before you can use this project.

Identity and Access Management Rights

The Terraform user must have permissions to:

- MANAGE dynamic groups (instance_principal and KMS integration)

- MANAGE cluster-family in compartment

- MANAGE virtual-network-family in compartment

- MANAGE instance-family in compartment

Install Terraform

Start by installing Terraform and configuring your path.

Download Terraform

- Open your browser and navigate to the Terraform download page. You need version 1.2.0+.

- Download the appropriate version for your operating system

- Extract the contents of the compressed file and copy the terraform binary to a location that is in your path (see the next section)

Configure path on Linux/macOS

Open a terminal and enter the following:

# edit your desired path in-place:

sudo mv /path/to/terraform /usr/local/bin

Configure path on Windows

Follow the steps below to configure your path on Windows:

- Click on 'Start', type 'Control Panel' and open it

- Select System > Advanced System Settings > Environment Variables

- Select System variables > PATH and click 'Edit'

- Click New and paste the location of the directory where you have extracted the terraform.exe

- Close all open windows by clicking OK

- Open a new terminal and verify terraform has been properly installed

Testing Terraform installation

Open a terminal and test:

terraform -v

Generate API keys

Follow the documentation for generating keys on OCI Documentation.

Upload your API keys

Follow the documentation for uploading your keys on OCI Documentation.

Note the fingerprint.

Create an OCI compartment

Follow the documentation for creating a compartment.

Obtain the necessary OCIDs

The following OCIDs are required:

- Compartment OCID

- Tenancy OCID

- User OCID

Follow the documentation for obtaining the tenancy and user ids on OCI Documentation.

To obtain the compartment OCID:

- Navigate to Identity > Compartments

- Click on your Compartment

- Locate OCID on the page and click on 'Copy'

If you wish to encrypt Kubernetes secrets with a key from OCI KMS, you also need to create a vault and a key and obtain the key id.
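Once you have the key id, it can be passed to the module. The fragment below is a minimal sketch using two variables that appear elsewhere in this documentation (cluster_kms_key_id in the cluster example and create_iam_kms_policy under Identity: Policies). The OCID is a truncated placeholder, and how the "auto" setting behaves is an assumption not confirmed on this page.

```hcl
# Sketch only: replace the placeholder with the OCID of your own KMS key.
cluster_kms_key_id = "ocid1.key.oc1.." # placeholder, not a real OCID

# Listed under "Identity: Policies" with the options never/auto/always.
create_iam_kms_policy = "auto"
```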

Configure OCI Policy for OKE

Follow the documentation to create the necessary OKE policy.

Deploy the OKE Terraform Module

Prerequisites

- Required Keys and OCIDs

- Required IAM policies

- git, ssh client to run locally

- Terraform >= 1.2.0 to run locally

Provisioning from an OCI Resource Manager Stack

Network

Network resources configured for an OKE cluster.

The following resources may be created depending on provided configuration:

Cluster

An OKE-managed Kubernetes cluster.

The following resources may be created depending on provided configuration:

- core_network_security_group

- core_network_security_group_security_rule

- core_instance (operator)

- containerengine_cluster

Node Pool

A standard OKE-managed pool of worker nodes with enhanced feature support.

Configured with mode = "node-pool" on a worker_pools entry, or with worker_pool_mode = "node-pool" to use as the default for all pools unless otherwise specified.
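As a rough illustration of how the default mode and a per-pool override can combine, the sketch below sets worker_pool_mode once and overrides it on a single entry. The pool names np1 and ip1 are hypothetical, and the shape and sizing values are borrowed from the terraform.tfvars example in Getting started; the other modes are described later in this section.

```hcl
# Default mode for every pool that does not set its own.
worker_pool_mode = "node-pool"

worker_pools = {
  # Hypothetical pool name; inherits mode = "node-pool" from worker_pool_mode.
  np1 = {
    shape  = "VM.Standard.E4.Flex",
    ocpus  = 2,
    memory = 32,
    size   = 1
  }

  # Hypothetical pool name; overrides the default mode for this entry only.
  ip1 = {
    mode   = "instance-pool",
    shape  = "VM.Standard.E4.Flex",
    ocpus  = 2,
    memory = 32,
    size   = 1
  }
}
```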

You can set the image_type attribute to one of the following values:

- oke (default)

- platform

- custom

When the image_type is oke or platform, the node pool image is likely to be updated on subsequent terraform apply runs, because the module uses a data source to fetch the latest available images.

To avoid this situation, you can set the image_type to custom and the image_id to the OCID of the image you want to use for the node-pool.
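A minimal sketch of pinning the image is shown below. The pool name np1 and its sizing come from the terraform.tfvars example in Getting started; the image OCID is a truncated placeholder that you would replace with the image you have chosen.

```hcl
worker_pools = {
  np1 = {
    shape  = "VM.Standard.E4.Flex",
    ocpus  = 2,
    memory = 32,
    size   = 1,

    # Pin the image so later applies do not pick up a newer one.
    image_type = "custom",
    image_id   = "ocid1.image.oc1.." # placeholder, not a real OCID
  }
}
```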

The following resources may be created depending on provided configuration:

Virtual Node Pool

An OKE-managed Virtual Node Pool.

Configured with mode = "virtual-node-pool" on a worker_pools entry, or with worker_pool_mode = "virtual-node-pool" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

Instance

A set of self-managed Compute Instances for custom user-provisioned worker nodes not managed by an OCI pool, but individually by Terraform.

Configured with mode = "instance" on a worker_pools entry, or with worker_pool_mode = "instance" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance

Instance Pool

A self-managed Compute Instance Pool for custom user-provisioned worker nodes.

Configured with mode = "instance-pool" on a worker_pools entry, or with worker_pool_mode = "instance-pool" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance_configuration

- core_instance_pool

Cluster Network

A self-managed HPC Cluster Network.

Configured with mode = "cluster-network" on a worker_pools entry, or with worker_pool_mode = "cluster-network" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance_configuration

- core_cluster_network

User Guide

- User Guide

Topology

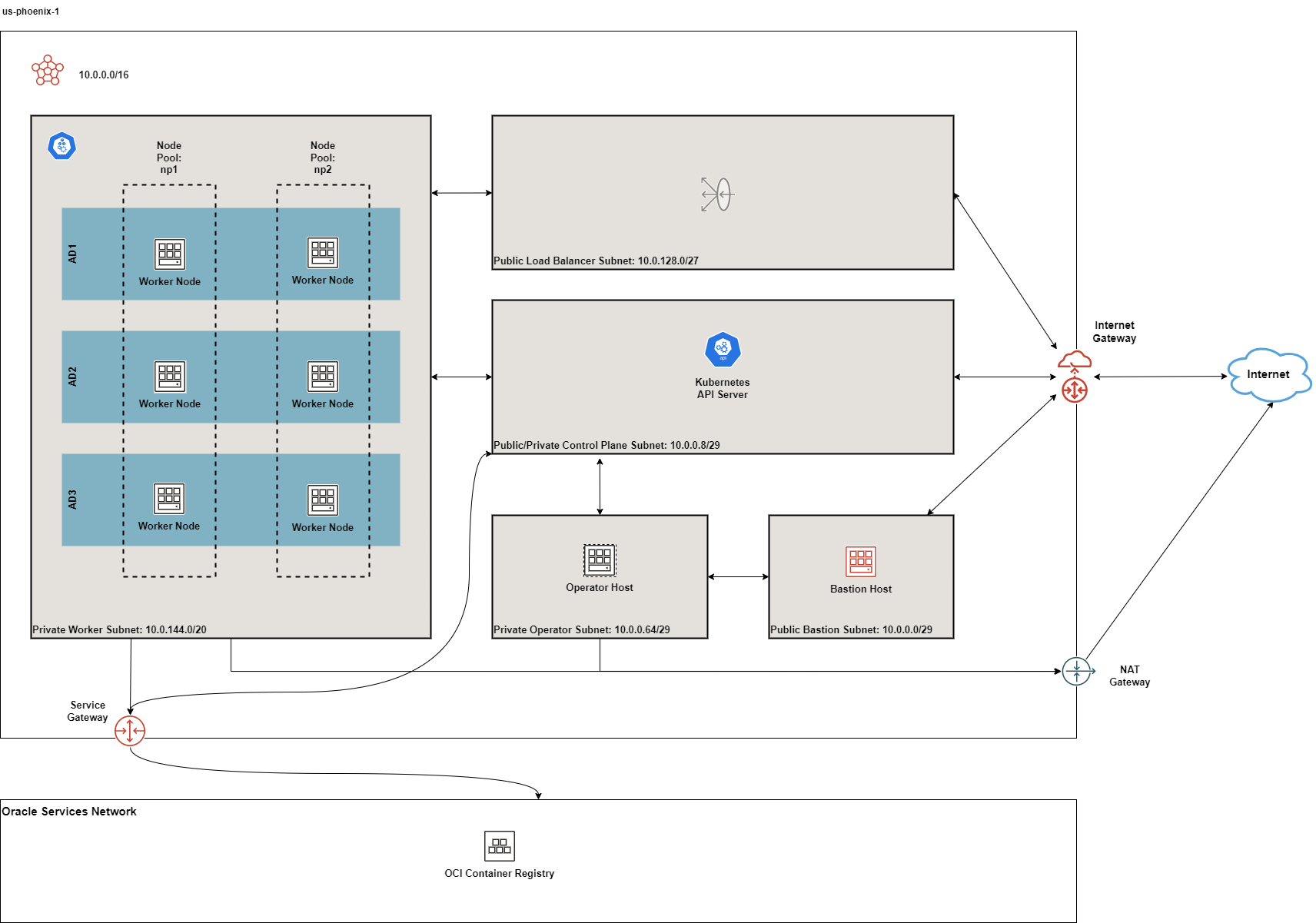

The following resources are created by default:

- 1 VCN with Internet, NAT and Service Gateways

- Route tables for Internet, NAT and Service Gateways

- 1 regional public subnet for the bastion host along with its security list

- 1 regional private subnet for the operator host along with its NSG

- 1 public control plane subnet

- 1 private regional worker subnet

- 1 public regional load balancer

- 1 bastion host

- 1 operator host

- 1 public Kubernetes Cluster with private worker nodes

- 1 Network Security Group (NSG) for each of control plane, workers and load balancers

The Kubernetes Control Plane Nodes run in Oracle's tenancy and are not shown here.

Although the recommended approach is now to deploy private clusters, we are currently keeping the default setting as public. This gives our users time to adjust other configurations, e.g. their CI/CD tools.

The Load Balancers are only created when Kubernetes services of type LoadBalancer are deployed or you manually create Load Balancers yourself.

The diagrams below depict the default deployment in multi-AD regions:

Figure 1: Multi-AD Default Deployment

and single-AD regions:

The node pools above are depicted for illustration purposes only. By default, the clusters are now created without any node pools.

Networking and Gateways

The following subnets are created by default:

- 1 public regional control plane subnet: this subnet hosts an endpoint for the Kubernetes API server publicly accessible from the Internet. Typically, only 1 IP address is sufficient if you intend to host only 1 OKE cluster in this VCN. However, if you intend to host many OKE or Kubernetes clusters in this VCN and you intend to reuse the same subnets, you need to increase the default size of this subnet.

- 1 private regional worker subnet: this subnet hosts the worker nodes where your workloads will be running. By default, they are private. If you need admin access to the worker nodes e.g. SSH, you'll need to enable and use either the bastion host or the OCI Bastion Service.

- 1 public regional load balancer subnet: this subnet hosts your OCI Load Balancer which acts as a network ingress point into your OKE cluster.

- 1 public regional bastion subnet: this subnet hosts an optional bastion host. See additional documentation on the purpose of the bastion host.

- 1 private regional operator subnet: this subnet hosts an optional operator host that is used for admin purposes. See additional documentation on the purpose of the operator host.

The bastion subnet is regional, i.e. in multi-AD regions the subnet spans all Availability Domains. By default, the bastion subnet is assigned a CIDR of 10.0.0.0/29. A /29 contains 8 addresses and OCI reserves 3 addresses in every subnet, which leaves a maximum of 5 assignable IP addresses in the bastion subnet.

The workers subnet has a CIDR of 10.0.144.0/20 assigned by default. This gives the subnet a maximum possible of 4093 IP addresses. This is enough to scale the cluster to the maximum number of worker nodes (2000) currently allowed by Oracle Container Engine.

The load balancer subnets are of 2 types:

- public

- private

By default, only the public load balancer subnet is created. See Public and Internal Load Balancers for more details. The private load balancer subnet has a CIDR of 10.0.32.0/27 whereas the public load balancer subnet has a CIDR of 10.0.128.0/27 assigned by default. Each subnet can therefore assign a maximum of 29 IP addresses, so 9 load balancers can be created in each. You can control the size of your subnets and have more load balancers if required by adjusting the newbits and netnum values in the subnets parameter.
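To see how newbits and netnum translate into a CIDR, the sketch below evaluates Terraform's built-in cidrsubnet function for a few of the values from the terraform.tfvars example in Getting started. It assumes the module derives each subnet from the first VCN CIDR in this way, which this page does not confirm; the local names are hypothetical. The results follow from the values chosen here and are not the module defaults quoted above.

```hcl
locals {
  vcn_cidr = "10.0.0.0/16"

  # newbits is added to the VCN prefix length; netnum selects the n-th
  # block of that size inside the VCN.
  subnet_layout = {
    bastion = { newbits = 13, netnum = 0 }  # /16 + 13 = /29
    pub_lb  = { newbits = 11, netnum = 17 } # /16 + 11 = /27
    workers = { newbits = 2, netnum = 1 }   # /16 + 2  = /18
  }

  subnet_cidrs = {
    for name, s in local.subnet_layout :
    name => cidrsubnet(local.vcn_cidr, s.newbits, s.netnum)
  }
}

# Evaluates to:
#   bastion = "10.0.0.0/29"
#   pub_lb  = "10.0.2.32/27"
#   workers = "10.0.64.0/18"
output "subnet_cidrs" {
  value = local.subnet_cidrs
}
```

Lowering newbits by one doubles the size of the resulting subnet, so a load balancer subnet with newbits = 10 would be a /26 instead of a /27.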

The subnets parameter governs the boundaries and sizes of the subnets. If you need to change the default values, refer to the Networking Documentation to see how. We recommend working with your network administrator to design your network. The following additional documentation is useful in designing your network:

The following gateways are also created:

- Internet Gateway: this is required if the application is public-facing or a public bastion host is used

- NAT Gateway if deployed in private mode

- Service Gateway: this is required for connectivity between worker nodes and the control plane

The Service Gateway also allows OCI cloud resources without public IP addresses to privately access Oracle services without the traffic going over the public Internet. Refer to the OCI Service Gateway documentation to understand whether you need to enable it.

Bastion Host

The bastion host is created in a public regional subnet. You can create or destroy it at any time with no effect on the Kubernetes cluster by setting create_bastion to true or false in your variable file. You can also turn it on or off by changing the bastion_state to RUNNING or STOPPED respectively.

By default, the bastion host can be accessed from anywhere. However, you can restrict access to a defined list of CIDR blocks using the bastion_allowed_cidrs parameter. You can also make the bastion host private if you have an alternative connectivity method to your VCN, e.g. a VPN.
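A minimal sketch of restricting bastion access is shown below, using the variable names from the terraform.tfvars example in Getting started. The CIDR is taken from the 203.0.113.0/24 documentation range and is purely illustrative; substitute the networks you actually connect from.

```hcl
create_bastion = true

# Illustrative value only: replace with your own office or VPN egress ranges.
bastion_allowed_cidrs = ["203.0.113.0/24"]
```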

You can use the bastion host for the following:

- SSH to the worker nodes

- SSH to the operator host to manage your Kubernetes cluster

To SSH to the bastion, copy the command that terraform outputs at the end of its run:

ssh_to_bastion = ssh -i /path/to/private_key opc@bastion_ip

To SSH to the worker nodes, you can do the following:

ssh -i /path/to/private_key -J <username>@bastion_ip opc@worker_node_private_ip

If your private ssh key has a different name or path than the default ~/.ssh/id_* that ssh expects, e.g. if your private key is ~/.ssh/dev_rsa, you must add it to your ssh agent:

eval $(ssh-agent -s)

ssh-add ~/.ssh/dev_rsa

Public vs Private Clusters

When deployed in public mode, the Kubernetes API endpoint is publicly accessible.

![Accessing the Kubernetes API endpoint publicly](images/publiccluster.png)

You can set the Kubernetes cluster to be public and restrict its access to the CIDR blocks A.B.C.D/A and X.Y.Z.X/Z by using the following parameters:

control_plane_is_public = true # *true/false

control_plane_allowed_cidrs = ["A.B.C.D/A","X.Y.Z.X/Z"]

When deployed in private mode, the Kubernetes endpoint can only be accessed from the operator host or from a defined list of CIDR blocks specified in control_plane_allowed_cidrs. This assumes that you have established some form of connectivity with the VCN via VPN or FastConnect from the networks listed in control_plane_allowed_cidrs.
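A private control plane could be sketched as follows, reusing the parameters shown above together with create_bastion and create_operator from the terraform.tfvars example in Getting started. The CIDR is a hypothetical on-premises range, assumed to be reachable over VPN or FastConnect.

```hcl
control_plane_is_public = false

# Hypothetical on-premises network with connectivity to the VCN.
control_plane_allowed_cidrs = ["192.168.10.0/24"]

# Keep the bastion and operator hosts so the endpoint stays reachable
# from inside the VCN.
create_bastion  = true
create_operator = true
```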

The following table maps all possible cluster and workers deployment combinations:

| Workers/control plane | public | private |

|---|---|---|

| worker_type=public | X | X |

| worker_type=private | X | X |

Public vs Private worker nodes

Public workers

When deployed in public mode, all worker subnets will be deployed as public subnets and route to the Internet Gateway directly. Worker nodes will have both private and public IP addresses. Their private IP addresses will be from the range of the worker subnet they are part of whereas the public IP addresses will be allocated from Oracle's pool of public IP addresses.

If you intend to use Kubernetes NodePort services on your public workers or SSH to them, you must explicitly enable these in order for the security rules to be properly configured and allow access:

allow_node_port_access = true

allow_worker_ssh_access = true

Because of the increased attack surface area, we do not recommend running your worker nodes publicly. However, there are some valid use cases for these and you have the option to make this choice.

Private workers

When deployed in private mode, the worker subnet will be deployed as a private subnet and route to the NAT Gateway instead. This considerably reduces the surface attack area and improves the security posture of your OKE cluster as well as the rest of your infrastructure.

Irrespective of whether you run your worker nodes publicly or privately, if you ssh to them, you must do so through the bastion host or the OCI Bastion Service. Ensure you have enabled the bastion host.
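Put together, a private worker setup with SSH through the bastion might look like the sketch below. All three variables appear elsewhere in this documentation; combining them this way is an illustration rather than a required configuration.

```hcl
worker_type             = "private" # public/private
allow_worker_ssh_access = true      # configures the security rules for SSH
create_bastion          = true      # SSH to the workers goes through the bastion
```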

Public vs. Internal Load Balancers

You can use both public and internal load balancers. By default, OKE creates public load balancers whenever you deploy services of type LoadBalancer. As public load balancers are allocated public IP addresses, they require a public subnet, and the default service load balancer is therefore set to use the public subnet pub-lb.

You can change this default behaviour and use internal load balancers instead. Internal load balancers have only private IP addresses and are not accessible from the Internet. Although you can place internal load balancers in public subnets (they just will not be allocated public IP addresses), we recommend you use a different subnet for internal load balancers.

Depending on your use case, you can also have both public and private load balancers.

Refer to the user guide on load balancers for more details.

Using Public Load Balancers

When creating a service of type LoadBalancer, you must specify the list of NSGs using OCI Load Balancer annotations e.g.:

apiVersion: v1
kind: Service
metadata:
  name: acme-website
  annotations:
    oci.oraclecloud.com/oci-network-security-groups: "ocid1.networksecuritygroup...."
    service.beta.kubernetes.io/oci-load-balancer-security-list-management-mode: "None"
spec:
  type: LoadBalancer
  ....

Since we have already added the NodePort range to the public load balancer NSG, you can also disable the security list management and set its value to "None".

Using Internal Load Balancers

When creating an internal load balancer, you must ensure the following:

- load_balancers is set to both or internal.

Setting the load_balancers parameter to both or internal only ensures that the private subnet for internal load balancers and the required NSG are created. To make it the default subnet for your service load balancers, set preferred_load_balancer to internal. That way, if you use both types of load balancers, the cluster will prefer the internal load balancer subnet.
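The two settings described above can be sketched as a small fragment. It uses only the parameter names and values given in this section.

```hcl
# Create both the public and the internal load balancer subnets and NSGs.
load_balancers = "both"

# Make the internal subnet the default for service load balancers.
preferred_load_balancer = "internal"
```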

Even if you set the preferred_load_balancer to internal, you still need to set the correct service annotation when creating internal load balancers. Just setting the subnet to be private is not sufficient e.g.

service.beta.kubernetes.io/oci-load-balancer-internal: "true"

Refer to OCI Documentation for further guidance on internal load balancers.

Creating LoadBalancers using IngressControllers

You may want to refer to the following articles exploring Ingress Controllers and Load Balancers for additional information:

- Experimenting with Ingress Controllers on Oracle Container Engine (OKE) Part 1

- [Experimenting with Ingress Controllers on Oracle Container Engine (OKE) Part 1]

Identity

Optional creation of Identity Dynamic Groups, Policies, and Tags.

Identity: Policies

Usage

create_iam_autoscaler_policy = "auto" // never/*auto/always

create_iam_kms_policy = "auto" // never/*auto/always

create_iam_operator_policy = "auto" // never/*auto/always

create_iam_worker_policy = "auto" // never/*auto/always

References

- Managing Dynamic Groups

- Managing Policies

- Policy Configuration for Cluster Creation and Deployment

- About Access Control and OCI Kubernetes Engine

- KMS

Identity: Tags

Usage

create_iam_tag_namespace = false // true/*false

create_iam_defined_tags = false // true/*false

tag_namespace = "oke"

use_defined_tags = false // true/*false

References

Network

Optional creation of VCN subnets, Network Security Groups, NSG Rules, and more.

Examples

Create Minimal Network Resources


# All configuration for network sub-module w/ defaults

# Virtual Cloud Network (VCN)

assign_dns = true # *true/false

create_vcn = true # *true/false

local_peering_gateways = {}

lockdown_default_seclist = true # *true/false

vcn_id = null # Ignored if create_vcn = true

vcn_cidrs = ["10.0.0.0/16"] # Ignored if create_vcn = false

vcn_dns_label = "oke" # Ignored if create_vcn = false

vcn_name = "oke" # Ignored if create_vcn = false

enable_ipv6 = false # true/*false

Create Common Network Resources

# All configuration for network sub-module w/ defaults

# Virtual Cloud Network (VCN)

assign_dns = true # *true/false

create_vcn = true # *true/false

local_peering_gateways = {}

lockdown_default_seclist = true # *true/false

vcn_id = null # Ignored if create_vcn = true

vcn_cidrs = ["10.0.0.0/16"] # Ignored if create_vcn = false

vcn_dns_label = "oke" # Ignored if create_vcn = false

vcn_name = "oke" # Ignored if create_vcn = false

enable_ipv6 = false # true/*false

# Subnets

subnets = {

bastion = { newbits = 13 }

operator = { newbits = 13 }

cp = { newbits = 13 }

int_lb = { newbits = 11 }

pub_lb = { newbits = 11 }

workers = { newbits = 2 }

pods = { newbits = 2 }

}

# Security

allow_node_port_access = true # *true/false

allow_pod_internet_access = true # *true/false

allow_worker_internet_access = false # true/*false

allow_worker_ssh_access = false # true/*false

control_plane_allowed_cidrs = ["0.0.0.0/0"] # e.g. "0.0.0.0/0"

control_plane_nsg_ids = [] # Additional NSGs combined with created

control_plane_type = "public" # public/*private

enable_waf = false # true/*false

load_balancers = "both" # public/internal/*both

preferred_load_balancer = "public" # public/*internal

worker_nsg_ids = [] # Additional NSGs combined with created

worker_type = "private" # public/*private

# See https://www.iana.org/assignments/protocol-numbers/protocol-numbers.xhtml

# Protocols: All = "all"; ICMP = 1; TCP = 6; UDP = 17

# Source/destination type: NSG ID: "NETWORK_SECURITY_GROUP"; CIDR range: "CIDR_BLOCK"

allow_rules_internal_lb = {

# "Allow TCP ingress to internal load balancers for port 8080 from VCN" : {

# protocol = 6, port = 8080, source = "10.0.0.0/16", source_type = "CIDR_BLOCK",

# },

}

allow_rules_public_lb = {

# "Allow TCP ingress to public load balancers for SSL traffic from anywhere" : {

# protocol = 6, port = 443, source = "0.0.0.0/0", source_type = "CIDR_BLOCK",

# },

# "Allow UDP egress to workers port range 50000-52767 from Public LBs" : {

# protocol = 17, destination_port_min = 50000, destination_port_max=52767, destination = "workers", destination_type = "NETWORK_SECURITY_GROUP"

# },

}

allow_rules_workers = {

# "Allow TCP ingress to workers for port 8080 from VCN" : {

# protocol = 6, port = 8080, source = "10.0.0.0/16", source_type = "CIDR_BLOCK",

# },

# "Allow UDP ingress to workers for port range 50000-52767 from Public LBs" : {

# protocol = 17, destination_port_min = 50000, destination_port_max=52767, source = "pub_lb", source_type = "NETWORK_SECURITY_GROUP"

# },

# "Allow TCP ingress to workers for port range 8888-8888 from existing NSG" : {

# protocol = 6, destination_port_min = 8888, destination_port_max=8888, source = "ocid1.networksecuritygroup.oc1.eu-frankfurt-1.aaaaaaaai6z4le2ji7dkpmuwff4525b734wrjlifjqkrzlr5qctgxdsyoyra", source_type = "NETWORK_SECURITY_GROUP"

# },

}

# Dynamic routing gateway (DRG)

create_drg = false # true/*false

drg_display_name = "drg"

drg_id = null

# Routing

ig_route_table_id = null # Optional ID of existing internet gateway route table

internet_gateway_id = null # Optional ID of existing internet gateway

internet_gateway_route_rules = [

# {

# destination = "192.168.0.0/16" # Route Rule Destination CIDR

# destination_type = "CIDR_BLOCK" # only CIDR_BLOCK is supported at the moment

# network_entity_id = "drg" # for internet_gateway_route_rules input variable, you can use special strings "drg", "internet_gateway" or pass a valid OCID using string or any Named Values

# description = "Terraformed - User added Routing Rule: To drg provided to this module. drg_id, if available, is automatically retrieved with keyword drg"

# },

]

igw_ngw_mixed_route_id = null # Optional ID of existing mixed route table NAT GW for IPv4 and Internet GW for IPv6

nat_gateway_id = null # Optional ID of existing NAT gateway

nat_gateway_public_ip_id = "none"

nat_route_table_id = null # Optional ID of existing NAT gateway route table

nat_gateway_route_rules = [

# {

# destination = "192.168.0.0/16" # Route Rule Destination CIDR

# destination_type = "CIDR_BLOCK" # only CIDR_BLOCK is supported at the moment

# network_entity_id = "drg" # for nat_gateway_route_rules input variable, you can use special strings "drg", "nat_gateway" or pass a valid OCID using string or any Named Values

# description = "Terraformed - User added Routing Rule: To drg provided to this module. drg_id, if available, is automatically retrieved with keyword drg"

# },

]

# Experimental

use_stateless_rules = true # Use stateless rules for security lists and network security groups instead of the default stateful rules.

# Note that the egress rule to 0.0.0.0/0 from pods and workers will be stateful regardless of this setting because of security concerns.

References

Subnets

Subnets are created for core components managed within the module, namely:

- Bastion

- Operator

- Control plane (cp)

- Workers

- Pods

- Internal load balancers (int_lb)

- Public load balancers (pub_lb)

Create new subnets (automatic)

subnets = {

bastion = { newbits = 13 }

operator = { newbits = 13 }

cp = { newbits = 13 }

int_lb = { newbits = 11 }

pub_lb = { newbits = 11 }

workers = { newbits = 2 }

pods = { newbits = 2 }

}

Create new subnets (forced)

subnets = {
  bastion = {
    create  = "always",
    netnum  = 0,
    newbits = 13
  }
  operator = {
    create  = "always",
    netnum  = 1,
    newbits = 13
  }
  cp = {
    create  = "always",
    netnum  = 2,
    newbits = 13
  }
  int_lb = {
    create  = "always",
    netnum  = 16,
    newbits = 11
  }
  pub_lb = {
    create  = "always",
    netnum  = 17,
    newbits = 11
  }
  workers = {
    create  = "always",
    netnum  = 1,
    newbits = 2
  }
}

Create new subnets (CIDR notation)

subnets = {

bastion = { cidr = "10.0.0.0/29" }

operator = { cidr = "10.0.0.64/29" }

cp = { cidr = "10.0.0.8/29" }

int_lb = { cidr = "10.0.0.32/27" }

pub_lb = { cidr = "10.0.128.0/27" }

workers = { cidr = "10.0.144.0/20" }

pods = { cidr = "10.0.64.0/18" }

}

Create new subnets with IPv4 and IPv6 (CIDR notation)

subnets = {

bastion = { cidr = "10.0.0.0/29", ipv6_cidr = "8, 0" }

operator = { cidr = "10.0.0.64/29", ipv6_cidr = "8, 1" }

cp = { cidr = "10.0.0.8/29", ipv6_cidr = "8, 2" }

int_lb = { cidr = "10.0.0.32/27", ipv6_cidr = "8, 3" }

pub_lb = { cidr = "10.0.128.0/27", ipv6_cidr = "8, 4" }

workers = { cidr = "10.0.144.0/20", ipv6_cidr = "2603:c020:8010:f002::/64" }

pods = { cidr = "10.0.64.0/18", ipv6_cidr = "2603:c020:8010:f003::/64" }

}

Use existing subnets

subnets = {

operator = { id = "ocid1.subnet..." }

cp = { id = "ocid1.subnet..." }

int_lb = { id = "ocid1.subnet..." }

pub_lb = { id = "ocid1.subnet..." }

workers = { id = "ocid1.subnet..." }

pods = { id = "ocid1.subnet..." }

}

References

- OCI Networking Overview

- VCNs and Subnets

- Terraform cidrsubnets function

Network Security Groups

Network Security Groups (NSGs) are used to permit network access between resources created by the module, namely:

- Bastion

- Operator

- Control plane (cp)

- Workers

- Pods

- Internal load balancers (int_lb)

- Public load balancers (pub_lb)

Create new NSGs

nsgs = {

bastion = {}

operator = {}

cp = {}

int_lb = {}

pub_lb = {}

workers = {}

pods = {}

}

Use existing NSGs

nsgs = {

bastion = { id = "ocid1.networksecuritygroup..." }

operator = { id = "ocid1.networksecuritygroup..." }

cp = { id = "ocid1.networksecuritygroup..." }

int_lb = { id = "ocid1.networksecuritygroup..." }

pub_lb = { id = "ocid1.networksecuritygroup..." }

workers = { id = "ocid1.networksecuritygroup..." }

pods = { id = "ocid1.networksecuritygroup..." }

}

References

Cluster

See also:

The OKE parameters concern mainly the following:

- whether you want your OKE control plane to be public or private

- whether to assign a public IP address to the API endpoint for public access

- whether you want to deploy public or private worker nodes

- whether you want to allow NodePort or SSH access to the worker nodes

- Kubernetes options such as dashboard, networking

- number of node pools and their respective sizes

- services and pods CIDR blocks

- whether to use encryption

- whether you want to enable dual-stack: IPv4 & IPv6

If you need to change the default services and pods' CIDRs, note the following:

- The CIDR block you specify for the VCN must not overlap with the CIDR block you specify for the Kubernetes services.

- The CIDR blocks you specify for pods running in the cluster must not overlap with CIDR blocks you specify for worker node and load balancer subnets.
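As a minimal illustration of the two rules above, the following values do not overlap. The pods_cidr and services_cidr values are the ones used in the example further down; vcn_cidrs is assumed here to be the module input that sets the VCN range, so confirm the name against the module's variables before relying on it.

```hcl
# Sketch only: vcn_cidrs is an assumed input name and 10.0.0.0/16 an assumed VCN range.
vcn_cidrs     = ["10.0.0.0/16"] # VCN range; worker and load balancer subnets are carved from it
pods_cidr     = "10.244.0.0/16" # outside 10.0.0.0/16, so no overlap with worker or load balancer subnets
services_cidr = "10.96.0.0/16"  # outside 10.0.0.0/16, so no overlap with the VCN
```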

Example usage

Basic cluster with defaults:

cluster_name = "oke-example"

kubernetes_version = "v1.34.1"

Enhanced cluster with extra configuration:

create_cluster = true // *true/false

cluster_dns = null

cluster_kms_key_id = null

cluster_name = "oke"

cluster_type = "enhanced" // *basic/enhanced

cni_type = "flannel" // *flannel/npn

assign_public_ip_to_control_plane = true // true/*false

image_signing_keys = []

kubernetes_version = "v1.34.1"

pods_cidr = "10.244.0.0/16"

services_cidr = "10.96.0.0/16"

use_signed_images = false // true/*false

enable_ipv6 = false //true/*false

backend_nsg_ids = ["ocid1.networksecuritygroup..."] // the workers and pods NSGs are always added.

OpenID Connect Authentication

By default, OKE clusters are set up to authenticate individuals (human users, groups or service principals) accessing the API endpoint using OCI Identity and Access Management (IAM).

Using OKE OIDC Authentication, we can authenticate OKE API Endpoint requests (from human users or service principals) using tokens issued by third-party Identity Providers without the need for federation with OCI IAM.

Prerequisites

Note the following prerequisites for enabling a cluster for OIDC authentication:

- The cluster must be an enhanced cluster. OIDC authentication is not supported for basic clusters.

- The cluster must be running Kubernetes version 1.21 (or later) -- for single external OIDC IdP setup.

- The cluster must be running Kubernetes version 1.30 (or later) -- for multiple external OIDC IdPs setup.

Configuration

In addition to the implicit OCI IAM, you can configure the OKE cluster to authenticate the cluster API endpoint requests using a single external OIDC (OpenID Connect) Identity Provider (IdP).

To do this, set the following variables:

oidc_discovery_enabled = true

oidc_token_authentication_config = {

client_id = ...,

issuer_url = ...,

username_claim = ...,

username_prefix = ...,

groups_claim = ...,

groups_prefix = ...,

required_claims = [

{

key = ...,

value = ...

},

{

key = ...,

value = ...

}

],

ca_certificate = ...,

signing_algorithms = []

}

To authenticate cluster API endpoint requests with multiple OIDC IdPs, use the Kubernetes feature that reads the authentication configuration from a file.

oidc_discovery_enabled = true

oidc_token_authentication_config = {

configuration_file = base64encode(yamlencode(

{

"apiVersion" = "apiserver.config.k8s.io/v1beta1"

"kind" = "AuthenticationConfiguration"

"jwt" = [

{

"issuer"= {

"url" = "...",

"audiences" = [

"..."

],

"audienceMatchPolicy" = "MatchAny"

}

"claimMappings" = {

"username" = {

"claim" = "..."

"prefix" = ""

}

}

"claimValidationRules" = [

{

"claim" = "..."

"requiredValue" = "..."

}

]

}

]

}

))

}

The authenticated users are mapped to a User resource in Kubernetes, and you have to set up the desired RBAC policies to provide access.

For example, for a GitHub Actions workflow:

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

  namespace: default

  name: actions-oidc-role

rules:

  - apiGroups: [""]

    resources: ["pods"]

    verbs: ["get", "watch", "list"]

  - apiGroups: ["apps"]

    resources: ["deployments"]

    verbs: ["get", "watch", "list", "create", "update", "delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

  name: actions-oidc-binding

  namespace: default

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: Role

  name: actions-oidc-role

subjects:

  - apiGroup: rbac.authorization.k8s.io

    kind: User

    name: actions-oidc:repo:GH-ACCOUNT/GH-REPO:ref:refs/heads/main
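The User name in the RoleBinding above has to match the username the API server derives from the token, which is the username_prefix followed by the value of the username_claim. A minimal sketch of a matching single-IdP configuration for GitHub Actions could look as follows. The client_id value is a hypothetical audience, the remaining arguments are left out for brevity, and the enabling variable shown in the Configuration section is still required.

```hcl
# Sketch only: values marked "hypothetical" are placeholders, not module defaults.
oidc_token_authentication_config = {
  client_id       = "oke-kubernetes-cluster",                      # hypothetical audience requested by the workflow
  issuer_url      = "https://token.actions.githubusercontent.com", # GitHub Actions OIDC issuer
  username_claim  = "sub",                                         # e.g. repo:GH-ACCOUNT/GH-REPO:ref:refs/heads/main
  username_prefix = "actions-oidc:",                               # prepended to the claim value
}
```

With these values, a workflow running on the main branch of GH-ACCOUNT/GH-REPO is seen by Kubernetes as the user actions-oidc:repo:GH-ACCOUNT/GH-REPO:ref:refs/heads/main, which is the subject bound above.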

Note:

- You need to make sure the OKE Control Plane endpoint is allowed to connect to the IdP.

allow_rules_cp = {

"Allow egress to anywhere HTTPS from OKE CP" : {

protocol = "6", port=443, destination = "0.0.0.0/0", destination_type = "CIDR_BLOCK",

}

}

- You cannot configure cluster OIDC Authentication using the arguments of the oidc_token_authentication_config (client_id, issuer_url, etc.) and the configuration_file at the same time.

OpenID Connect Discovery

Prerequisites

Note the following points when using OIDC Discovery:

- The cluster must be an enhanced cluster. OIDC Discovery is not supported for basic clusters.

- The cluster must be running Kubernetes version 1.21 (or later).

Configuration

OKE already supports Workload Identity to enable Kubernetes pods to access OCI resources, such as a secret or cloud storage bucket without storing access credentials in your Kubernetes cluster.

If you are looking to authorize Kubernetes pods to access non-OCI resources you can enable OKE OIDC Discovery.

When you enable OIDC discovery for an OKE cluster, OKE provides an OpenID Connect issuer endpoint. This endpoint serves the OIDC discovery document and the JSON web key set (JWKS), which contain the public key necessary for token validation. These resources enable third-party IdPs to validate tokens issued for pods in the OKE cluster, allowing those pods to access non-OCI resources.

To enable OKE OIDC Discovery, set the following variable:

open_id_connect_discovery_enabled = true

The OpenID Connect issuer endpoint is available in the output:

cluster_oidc_discovery_endpoint

Example usage

OIDC Authentication setup using Kubernetes API server flags

See examples/cluster-addons/vars-cluster-oidc-auth-single.auto.tfvars in the repository.

OIDC Authentication setup using Kubernetes API server configuration file

See examples/cluster-addons/vars-cluster-oidc-auth-multiple.auto.tfvars in the repository.

Reference

- OKE OpenID Authentication

- OKE Cluster Terraform resource

- GitHub workflow OKE OIDC authentication

- Kubernetes OIDC Authentication setup using Kubernetes API server configuration file

- Kubernetes OIDC Authentication setup using Kubernetes API server flags

OpenID Connect Discovery

With OKE OIDC Discovery, Kubernetes pods running on OKE clusters can be validated by third-party STS (Security Token Service) issuers, whether on-premises or in cloud service providers (CSPs) such as Amazon Web Services (AWS) and Google Cloud Platform (GCP), and authorized to access non-OCI resources.

Prerequisites

Note the following points when using OIDC Discovery:

- The cluster must be an enhanced cluster. OIDC Discovery is not supported for basic clusters.

- The cluster must be running Kubernetes version 1.21 (or later).

Configuration

When you enable OIDC discovery for an OKE cluster, OKE provides an OpenID Connect issuer endpoint. This endpoint serves the OIDC discovery document and the JSON web key set (JWKS), which contain the public key necessary for token validation. These resources enable third-party IdPs to validate tokens issued for pods in the OKE cluster, allowing those pods to access non-OCI resources.

To enable OKE OIDC Discovery, set the following variable:

open_id_connect_discovery_enabled = true

The OpenID Connect issuer endpoint is available in the output:

cluster_oidc_discovery_endpoint

Example usage

OIDC Discovery setup using Kubernetes API server flags

See examples/cluster-addons/vars-cluster-oidc-discovery.auto.tfvars in the repository.

Reference

Cluster Add-ons

With this module you can manage both essential and optional add-ons on enhanced OKE clusters.

It also provides the option to remove essential add-ons.

Cluster add-on removal (using the cluster_addons_to_remove variable) requires the creation of the operator host.

To list the available cluster add-ons for a specific Kubernetes version you can run the following oci-cli command:

oci ce addon-option list --kubernetes-version <k8s-version>

Note: For the cluster autoscaler you should choose only one of the options:

- the stand-alone cluster-autoscaler deployment, using the extension module

- the cluster-autoscaler add-on

When customizing the configuration of an existing add-on, set override_existing = true. The default value is false.

Example usage

cluster_addons = {

"CertManager" = {

remove_addon_resources_on_delete = true

override_existing = true # Default is false if not specified

# The list of supported configurations for the cluster addons is here: https://docs.oracle.com/en-us/iaas/Content/ContEng/Tasks/contengconfiguringclusteraddons-configurationarguments.htm#contengconfiguringclusteraddons-configurationarguments_CertificateManager

configurations = [

{

key = "numOfReplicas"

value = "1"

}

]

}

# The NvidiaGpuPlugin is disabled by default. To enable it, add the following block to the cluster_addons variable

"NvidiaGpuPlugin" = {

remove_addon_resources_on_delete = true

},

# Prevent Flannel pods from being scheduled using a non-existing label as nodeSelector

"Flannel" = {

remove_addon_resources_on_delete = true

override_existing = true # Override the existing configuration with this one, if the Flannel addon is already enabled

configurations = [

{

key = "nodeSelectors"

value = "{\"addon\":\"no-schedule\"}"

}

],

},

# Prevent Kube-Proxy pods from being scheduled using a non-existing label as nodeSelector

"KubeProxy" = {

remove_addon_resources_on_delete = true

override_existing = true # Override the existing configuration with this one, if the KubeProxy addon is already enabled

configurations = [

{

key = "nodeSelectors"

value = "{\"addon\":\"no-schedule\"}"

}

],

}

}

cluster_addons_to_remove = {

Flannel = {

remove_k8s_resources = true

}

}

Reference

Workers

The worker_pools input defines worker node configuration for the cluster.

Many of the global configuration values below may be overridden on each pool definition or omitted for defaults, with the worker_ or worker_pool_ variable prefix removed, e.g. worker_image_id overridden with image_id.

For example:

worker_pool_mode = "node-pool"

worker_pool_size = 1

worker_pools = {

oke-vm-standard = {},

oke-vm-standard-large = {

description = "OKE-managed Node Pool with OKE Oracle Linux 8 image",

shape = "VM.Standard.E4.Flex",

create = true,

ocpus = 8,

memory = 128,

boot_volume_size = 200,

os = "Oracle Linux",

os_version = "8",

},

}

Workers: Mode

The mode parameter controls the type of resources provisioned in OCI for OKE worker nodes.

Workers / Mode: Node Pool

A standard OKE-managed pool of worker nodes with enhanced feature support.

Configured with mode = "node-pool" on a worker_pools entry, or with worker_pool_mode = "node-pool" to use as the default for all pools unless otherwise specified.

You can set the image_type attribute to one of the following values:

- oke (default)

- platform

- custom

When the image_type is equal to oke or platform there is a high risk for the node-pool image to be updated on subsequent terraform apply executions because the module is using a datasource to fetch the latest images available.

To avoid this situation, you can set the image_type to custom and the image_id to the OCID of the image you want to use for the node-pool.

The following resources may be created depending on provided configuration:

Usage

worker_pool_mode = "node-pool"

worker_pool_size = 1

worker_pools = {

oke-vm-standard = {},

oke-vm-standard-large = {

size = 1,

shape = "VM.Standard.E4.Flex",

ocpus = 8,

memory = 128,

boot_volume_size = 200,

create = false,

},

oke-vm-standard-ol7 = {

description = "OKE-managed Node Pool with OKE Oracle Linux 7 image",

size = 1,

os = "Oracle Linux",

os_version = "7",

create = false,

},

oke-vm-standard-ol8 = {

description = "OKE-managed Node Pool with OKE Oracle Linux 8 image",

size = 1,

os = "Oracle Linux",

os_version = "8",

},

oke-vm-standard-custom = {

description = "OKE-managed Node Pool with custom image",

image_type = "custom",

image_id = "ocid1.image...",

size = 1,

create = false,

},

}

References

- oci_containerengine_node_pool

- Modifying Node Pool and Worker Node Properties

- Adding and Removing Node Pools

Workers / Mode: Virtual Node Pool

An OKE-managed Virtual Node Pool.

Configured with mode = "virtual-node-pool" on a worker_pools entry, or with worker_pool_mode = "virtual-node-pool" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

Usage

worker_pools = {

oke-virtual = {

description = "OKE-managed Virtual Node Pool",

mode = "virtual-node-pool",

size = 1,

},

}

References

Workers / Mode: Instance

A set of self-managed Compute Instances for custom user-provisioned worker nodes not managed by an OCI pool, but individually by Terraform.

Configured with mode = "instance" on a worker_pools entry, or with worker_pool_mode = "instance" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance

Usage

worker_pools = {

oke-vm-instance = {

description = "Self-managed Instances",

mode = "instance",

size = 1,

node_labels = {

"keya" = "valuea",

"keyb" = "valueb"

},

secondary_vnics = {

"vnic-display-name" = {},

},

},

oke-vm-instance-burst = {

description = "Self-managed Instance With Bursting",

mode = "instance",

size = 1,

burst = "BASELINE_1_8", # Valid values BASELINE_1_8,BASELINE_1_2

},

}

Instance agent configuration:

worker_pools = {

oke-instance = {

agent_config = {

are_all_plugins_disabled = false,

is_management_disabled = false,

is_monitoring_disabled = false,

plugins_config = {

"Bastion" = "DISABLED",

"Block Volume Management" = "DISABLED",

"Compute HPC RDMA Authentication" = "DISABLED",

"Compute HPC RDMA Auto-Configuration" = "DISABLED",

"Compute Instance Monitoring" = "ENABLED",

"Compute Instance Run Command" = "ENABLED",

"Compute RDMA GPU Monitoring" = "DISABLED",

"Custom Logs Monitoring" = "ENABLED",

"Management Agent" = "ENABLED",

"Oracle Autonomous Linux" = "DISABLED",

"OS Management Service Agent" = "DISABLED",

}

}

},

}

References

Workers / Mode: Instance Pool

A self-managed Compute Instance Pool for custom user-provisioned worker nodes.

Configured with mode = "instance-pool" on a worker_pools entry, or with worker_pool_mode = "instance-pool" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance_configuration

- core_instance_pool

Usage

worker_pools = {

oke-vm-instance-pool = {

description = "Self-managed Instance Pool with custom image",

mode = "instance-pool",

size = 1,

node_labels = {

"keya" = "valuea",

"keyb" = "valueb"

},

secondary_vnics = {

"vnic-display-name" = {},

},

},

oke-vm-instance-pool-burst = {

description = "Self-managed Instance Pool With Bursting",

mode = "instance-pool",

size = 1,

burst = "BASELINE_1_8", # Valid values BASELINE_1_8,BASELINE_1_2

},

oke-vm-instance-pool-with-block-volume = {

description = "Self-managed Instance Pool with block volume",

mode = "instance-pool",

size = 1,

disable_block_volume = false,

block_volume_size_in_gbs = 60,

},

oke-vm-instance-pool-without-block-volume = {

description = "Self-managed Instance Pool without block volume",

mode = "instance-pool",

size = 1,

disable_block_volume = true,

},

}

Instance agent configuration:

worker_pools = {

oke-instance = {

agent_config = {

are_all_plugins_disabled = false,

is_management_disabled = false,

is_monitoring_disabled = false,

plugins_config = {

"Bastion" = "DISABLED",

"Block Volume Management" = "DISABLED",

"Compute HPC RDMA Authentication" = "DISABLED",

"Compute HPC RDMA Auto-Configuration" = "DISABLED",

"Compute Instance Monitoring" = "ENABLED",

"Compute Instance Run Command" = "ENABLED",

"Compute RDMA GPU Monitoring" = "DISABLED",

"Custom Logs Monitoring" = "ENABLED",

"Management Agent" = "ENABLED",

"Oracle Autonomous Linux" = "DISABLED",

"OS Management Service Agent" = "DISABLED",

}

}

},

}

References

Workers / Mode: Cluster Network

A self-managed HPC Cluster Network.

Configured with mode = "cluster-network" on a worker_pools entry, or with worker_pool_mode = "cluster-network" to use as the default for all pools unless otherwise specified.

The following resources may be created depending on provided configuration:

- identity_dynamic_group (workers)

- identity_policy (JoinCluster)

- core_instance_configuration

- core_cluster_network

Usage

worker_pools = {

oke-vm-standard = {

description = "Managed node pool for operational workloads without GPU toleration"

mode = "node-pool",

size = 1,

shape = "VM.Standard.E4.Flex",

ocpus = 2,

memory = 16,

boot_volume_size = 50,

},

oke-bm-gpu-rdma = {

description = "Self-managed nodes in a Cluster Network with RDMA networking",

mode = "cluster-network",

size = 1,

shape = "BM.GPU.B4.8",

placement_ads = [1],

image_id = "ocid1.image..."

cloud_init = [

{

content = <<-EOT

#!/usr/bin/env bash

echo "Pool-specific cloud_init using shell script"

EOT

},

],

secondary_vnics = {

"vnic-display-name" = {

nic_index = 1,

subnet_id = "ocid1.subnet..."

},

},

}

}

Instance agent configuration:

worker_pools = {

oke-instance = {

agent_config = {

are_all_plugins_disabled = false,

is_management_disabled = false,

is_monitoring_disabled = false,

plugins_config = {

"Bastion" = "DISABLED",

"Block Volume Management" = "DISABLED",

"Compute HPC RDMA Authentication" = "DISABLED",

"Compute HPC RDMA Auto-Configuration" = "DISABLED",

"Compute Instance Monitoring" = "ENABLED",

"Compute Instance Run Command" = "ENABLED",

"Compute RDMA GPU Monitoring" = "DISABLED",

"Custom Logs Monitoring" = "ENABLED",

"Management Agent" = "ENABLED",

"Oracle Autonomous Linux" = "DISABLED",

"OS Management Service Agent" = "DISABLED",

}

}

},

}

References

- Cluster Networks with Instance Pools

- Large Clusters, Lowest Latency: Cluster Networking on Oracle Cloud Infrastructure

- First principles: Building a high-performance network in the public cloud

- Running Applications on Oracle Cloud Using Cluster Networking

Workers / Mode: Compute Clusters

Create self-managed HPC Compute Clusters.

A compute cluster is a group of high performance computing (HPC), GPU, or optimized instances that are connected with a high-bandwidth, ultra low-latency network.

- BM.GPU.A100-v2.8

- BM.GPU.H100.8

- BM.GPU4.8

- BM.HPC2.36

- BM.Optimized3.36

Configured with mode = "compute-cluster" on a worker_pools entry, or with worker_pool_mode = "compute-cluster" to use as the default for all pools unless otherwise specified.

Compute clusters shared by multiple worker groups must be created using the variable worker_compute_clusters and should be referenced by the key in the compute_cluster attribute of the worker group.

If worker_compute_clusters is not specified, the module will create one compute cluster for each worker group.

Usage

worker_compute_clusters = { # Use this variable to define a compute cluster you intend to share across multiple worker pools.

"shared" = {

placement_ad = 1

}

}

worker_pools = {

oke-vm-standard = {

description = "Managed node pool for operational workloads without GPU toleration"

mode = "node-pool",

size = 1,

shape = "VM.Standard.E4.Flex",

ocpus = 2,

memory = 16,

boot_volume_size = 50,

},

compute-cluster-group-1 = {

shape = "BM.HPC2.36",

boot_volume_size = 100,

image_id = "ocid1.image.oc1..."

image_type = "custom"

mode = "compute-cluster"

compute_cluster = "shared"

instance_ids = ["1", "2", "3"] # List of instance IDs in the compute cluster. Each instance ID corresponds to a separate node in the cluster.

placement_ad = "1"

cloud_init = [

{

content = <<-EOT

#!/usr/bin/env bash

echo "Pool-specific cloud_init using shell script"

EOT

},

],

secondary_vnics = {

"vnic-display-name" = {

nic_index = 1,

subnet_id = "ocid1.subnet..."

},

},

}

compute-cluster-group-2 = {

shape = "BM.HPC2.36",

boot_volume_size = 100,

image_id = "ocid1.image.oc1..."

image_type = "custom"

mode = "compute-cluster"

compute_cluster = "shared"

instance_ids = ["a", "b", "c"] # List of instance IDs in the compute cluster. Each instance ID corresponds to a separate node in the cluster.

placement_ad = "1"

cloud_init = [

{

content = <<-EOT

#!/usr/bin/env bash

echo "Pool-specific cloud_init using shell script"

EOT

},

],

secondary_vnics = {

"vnic-display-name" = {

nic_index = 1,

subnet_id = "ocid1.subnet..."

},

},

}

compute-cluster-group-3 = {

shape = "BM.HPC2.36",

boot_volume_size = 100,

image_id = "ocid1.image.oc1..."

image_type = "custom"

mode = "compute-cluster"

instance_ids = ["001", "002", "003"] # List of instance IDs in the compute cluster. Each instance ID corresponds to a separate node in the cluster.

placement_ad = "1"

cloud_init = [

{

content = <<-EOT

#!/usr/bin/env bash

echo "Pool-specific cloud_init using shell script"

EOT

},

],

}

}

Instance agent configuration:

worker_pools = {

oke-instance = {

agent_config = {

are_all_plugins_disabled = false,

is_management_disabled = false,

is_monitoring_disabled = false,

plugins_config = {

"Bastion" = "DISABLED",

"Block Volume Management" = "DISABLED",

"Compute HPC RDMA Authentication" = "DISABLED",

"Compute HPC RDMA Auto-Configuration" = "DISABLED",

"Compute Instance Monitoring" = "ENABLED",

"Compute Instance Run Command" = "ENABLED",

"Compute RDMA GPU Monitoring" = "DISABLED",

"Custom Logs Monitoring" = "ENABLED",

"Management Agent" = "ENABLED",

"Oracle Autonomous Linux" = "DISABLED",

"OS Management Service Agent" = "DISABLED",

}

}

},

}

References

- Compute Clusters

- Large Clusters, Lowest Latency: Cluster Networking on Oracle Cloud Infrastructure

- First principles: Building a high-performance network in the public cloud

- Running Applications on Oracle Cloud Using Cluster Networking

Workers: Network

Subnets

worker_pool_mode = "node-pool"

worker_pool_size = 1

worker_subnet_id = "ocid1.subnet..."

worker_pools = {

oke-vm-custom-subnet-flannel = {

subnet_id = "ocid1.subnet..."

},

oke-vm-custom-subnet-npn = {

subnet_id = "ocid1.subnet..."

pod_subnet_id = "ocid1.subnet..." // when cni_type = "npn"

},

}

Network Security Groups

worker_pool_mode = "node-pool"

worker_pool_size = 1

worker_nsg_ids = ["ocid1.networksecuritygroup..."]

pod_nsg_ids = [] // when cni_type = "npn"

worker_pools = {

oke-vm-custom-nsgs-flannel = {

nsg_ids = ["ocid1.networksecuritygroup..."]

},

oke-vm-custom-nsgs-npn = {

nsg_ids = ["ocid1.networksecuritygroup..."]

pod_nsg_ids = ["ocid1.networksecuritygroup..."] // when cni_type = "npn"

},

}

Secondary VNICs

On pools with a self-managed mode:

worker_pool_mode = "node-pool"

worker_pool_size = 1

kubeproxy_mode = "iptables" // *iptables/ipvs

worker_is_public = false

assign_public_ip = false

worker_nsg_ids = ["ocid1.networksecuritygroup..."]

worker_subnet_id = "ocid1.subnet..."

max_pods_per_node = 110

pod_nsg_ids = [] // when cni_type = "npn"

worker_pools = {

oke-vm-custom-network-flannel = {

assign_public_ip = false,

create = false,

subnet_id = "ocid1.subnet..."

nsg_ids = ["ocid1.networksecuritygroup..."]

},

oke-vm-custom-network-npn = {

assign_public_ip = false,

create = false,

subnet_id = "ocid1.subnet..."

pod_subnet_id = "ocid1.subnet..."

nsg_ids = ["ocid1.networksecuritygroup..."]

pod_nsg_ids = ["ocid1.networksecuritygroup..."]

},

oke-vm-vnics = {

mode = "instance-pool",

size = 1,

create = false,

secondary_vnics = {

vnic0 = {

nic_index = 0,

subnet_id = "ocid1.subnet..."

},

vnic1 = {

nic_index = 1,

subnet_id = "ocid1.subnet..."

},

},

},

oke-bm-vnics = {

mode = "cluster-network",

size = 2,

shape = "BM.GPU.B4.8",

placement_ads = [1],

create = false,

secondary_vnics = {

gpu0 = {

nic_index = 0,

subnet_id = "ocid1.subnet..."

},

gpu1 = {

nic_index = 1,

subnet_id = "ocid1.subnet..."

},

},

},

}

Workers: Image

The operating system image for worker nodes may be defined both globally and on each worker pool.

Recommended base images:

Workers: Cloud-Init

Custom actions may be configured on instance startup in a number of ways depending on the use case and preferences.

See also:

Global

Cloud init configuration applied to all workers:

worker_cloud_init = [

{

content = <<-EOT

runcmd:

- echo "Global cloud_init using cloud-config"

EOT

content_type = "text/cloud-config",

},

{

content = "/path/to/file"

content_type = "text/cloud-boothook",

},

{

content = "<Base64-encoded content>"

content_type = "text/x-shellscript",

},

]

Pool-specific

Cloud init configuration applied to a specific worker pool:

worker_pools = {

pool_default = {}

pool_custom = {

cloud_init = [

{

content = <<-EOT

runcmd:

- echo "Pool-specific cloud_init using cloud-config"

EOT

content_type = "text/cloud-config",

},

{

content = "/path/to/file"

content_type = "text/cloud-boothook",

},

{

content = "<Base64-encoded content>"

content_type = "text/x-shellscript",

},

]

}

}

Default Cloud-Init Disabled

When providing a custom script that calls OKE initialization:

worker_disable_default_cloud_init = true

Workers: Scaling

There are two easy ways to add worker nodes to a cluster:

- Add entries to worker_pools.

- Increase the size of a worker_pools entry.

Worker pools can be added and removed, and their size and boot volume size can be updated. After each change, run terraform apply.

Changes to the number and size of pools take effect as soon as terraform apply completes. Changes to the boot volume size only apply to nodes created after the change.

Autoscaling

See Extensions/Cluster Autoscaler.

Examples
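A minimal sketch of both scaling approaches described above, using only the size and boot_volume_size attributes shown elsewhere in this document. The pool names are hypothetical.

```hcl
# Sketch only: pool names are illustrative.
worker_pools = {
  oke-vm-standard = {
    size = 3,               # increased from a previous value of 1
  },
  oke-vm-standard-extra = { # newly added entry; creates an additional pool
    size             = 2,
    boot_volume_size = 100, # only affects nodes created after this change
  },
}
```

Run terraform apply after editing the map to have the changes applied.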

Workers: Storage

TODO

Workers: Draining

Usage

worker_pool_mode = "node-pool"

worker_pool_size = 1

# Configuration for draining nodes through operator

worker_drain_ignore_daemonsets = true

worker_drain_delete_local_data = true

worker_drain_timeout_seconds = 900

worker_pools = {

oke-vm-active = {

description = "Node pool with active workers",

size = 2,

},

oke-vm-draining = {

description = "Node pool with scheduling disabled and draining through operator",

drain = true,

},

oke-vm-disabled = {

description = "Node pool with resource creation disabled (destroyed)",

create = false,

},

oke-managed-drain = {

description = "Node pool with custom settings for managed cordon & drain",

eviction_grace_duration = 30, # specified in seconds

is_force_delete_after_grace_duration = true

},

}

Example

Terraform will perform the following actions:

# module.workers_only.module.utilities[0].null_resource.drain_workers[0] will be created

+ resource "null_resource" "drain_workers" {

+ id = (known after apply)

+ triggers = {

+ "drain_commands" = jsonencode(

[

+ "kubectl drain --timeout=900s --ignore-daemonsets=true --delete-emptydir-data=true -l oke.oraclecloud.com/pool.name=oke-vm-draining",

]

)

+ "drain_pools" = jsonencode(

[

+ "oke-vm-draining",

]

)

}

}

Plan: 1 to add, 0 to change, 0 to destroy.

module.workers_only.module.utilities[0].null_resource.drain_workers[0] (remote-exec): node/10.200.220.157 cordoned

module.workers_only.module.utilities[0].null_resource.drain_workers[0] (remote-exec): WARNING: ignoring DaemonSet-managed Pods: kube-system/csi-oci-node-99x74, kube-system/kube-flannel-ds-spvsp, kube-system/kube-proxy-6m2kk, ...

module.workers_only.module.utilities[0].null_resource.drain_workers[0] (remote-exec): node/10.200.220.157 drained

module.workers_only.module.utilities[0].null_resource.drain_workers[0]: Creation complete after 18s [id=7686343707387113624]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Observe that the node(s) are now disabled for scheduling, and free of workloads other than DaemonSet-managed Pods when worker_drain_ignore_daemonsets = true (default):

kubectl get nodes -l oke.oraclecloud.com/pool.name=oke-vm-draining

NAME STATUS ROLES AGE VERSION

10.200.220.157 Ready,SchedulingDisabled node 24m v1.26.2

kubectl get pods --all-namespaces --field-selector spec.nodeName=10.200.220.157

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system csi-oci-node-99x74 1/1 Running 0 50m

kube-system kube-flannel-ds-spvsp 1/1 Running 0 50m

kube-system kube-proxy-6m2kk 1/1 Running 0 50m

kube-system proxymux-client-2r6lk 1/1 Running 0 50m

Run the following command to uncordon a previously drained worker pool. The drain = true setting should be removed from the worker_pools entry to avoid re-draining the pool when running Terraform in the future.

kubectl uncordon -l oke.oraclecloud.com/pool.name=oke-vm-draining

node/10.200.220.157 uncordoned

References

Workers: Node Cycle

Cycling nodes simplifies both the upgrading of the Kubernetes and host OS versions running on the managed worker nodes, and the updating of other worker node properties.

When you set node_cycling_enabled to true for a node pool, OKE will compare the properties of the existing nodes in the node pool with the properties of the node_pool. If any of the following attributes is not aligned, the node is marked for replacement:

- kubernetes_version

- node_labels

- compute_shape (shape, ocpus, memory)

- boot_volume_size

- image_id

- node_metadata

- ssh_public_key

- cloud_init

- nsg_ids

- volume_kms_key_id

- pv_transit_encryption

The node_cycling_max_surge (default: 1) and node_cycling_max_unavailable (default: 0) node_pool attributes can be configured with absolute values or percentage values, calculated relative to the node_pool size. These attributes determine how OKE will replace the nodes with a stale config in the node_pool.

The node_cycling_mode attribute supports two node cycling modes:

- instance (default): cycling deletes the node and creates a new one with the changes applied.

- boot_volume: cycling swaps the boot volume on the same node.

Notes:

- Only a subset of fields (kubernetes_version, image_id, boot_volume_size, node_metadata, ssh_public_key, volume_kms_key_id) can be changed with boot_volume cycling.

- The cycling operation will attempt to bring all nodes in the node pool in sync with the node pool specification. If boot_volume cycling mode is chosen and a node needs changes to fields that cannot be updated via a boot_volume cycle, the cycle attempt for that node will fail. The cycling mode then has to be changed to instance and the node-cycle operation retried.

When cycling nodes, OKE cordons, drains, and terminates nodes according to the node pool's cordon and drain options.

Notes:

- It's strongly recommended to use readiness probes and PodDisruptionBudgets to reduce the impact of the node replacement operation.

- This operation is supported only with enhanced OKE clusters.

- New nodes will be created within the same AD/FD as the ones they replace.

- Node cycle requests can be canceled but can't be reverted.

- When setting a high node_cycling_max_surge value, check your tenancy compute limits to confirm availability of resources for the new worker nodes.

- Compatible with the cluster_autoscaler. During node-cycling execution, the request to reduce node_pool size is rejected, and all the worker nodes within the cycled node_pool are annotated with "cluster-autoscaler.kubernetes.io/scale-down-disabled": "true" to prevent the termination of the newly created nodes.

- node_cycling_enabled = true is incompatible with changes to the node_pool placement_config (subnet_id, availability_domains, placement_fds, etc.).

- If the kubernetes_version attribute is changed when image_type = custom, ensure a compatible image_id with the new Kubernetes version is provided.

Usage

# Example worker pool node-cycle configuration.

worker_pools = {

cycled-node-pool = {

description = "Cycling nodes in a node_pool.",

size = 4,

node_cycling_enabled = true

node_cycling_max_surge = "25%"

node_cycling_max_unavailable = 0

node_cycling_mode = ["instance"] # An alternative value is boot_volume. Only a single mode is supported.

force_node_action = true # *true/false - Applicable when the node cycling mode is "boot_volume".

force_node_delete = true # *true/false - Applicable when the node cycling mode is "instance".

}

}
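
For comparison, a minimal sketch of a pool cycled in boot_volume mode, changing only fields that the notes above list as updatable by a boot volume swap. The pool name, version string, and sizes are hypothetical. Allowing one unavailable node is an assumption made here because an in-place swap takes the node out of service while it runs.

```hcl
# Sketch only: the pool name, version, and boot volume size are hypothetical.
worker_pools = {
  boot-volume-cycled-pool = {
    description = "Cycling nodes by swapping boot volumes."
    size        = 4

    # Only fields that boot_volume cycling can update are changed here.
    kubernetes_version = "v1.30.1"
    boot_volume_size   = 100

    node_cycling_enabled         = true
    node_cycling_max_unavailable = 1 # assumption: in-place cycling needs at least one node offline
    node_cycling_mode            = ["boot_volume"]
    force_node_action            = true # applies to boot_volume mode
  }
}
```

If a later change touches a field outside that subset (for example node_labels), the mode would need to be switched back to instance before retrying the cycle.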

References

- oci_containerengine_node_pool

- Performing an In-Place Worker Node Update by Cycling Nodes in an Existing Node Pool

- Introducing On Demand Node Cycling for OCI Kubernetes Engine

Load Balancers

Using Dynamic and Flexible Load Balancers

When you create a service of type LoadBalancer, by default, an OCI Load Balancer with dynamic shape 100Mbps will be created.

You can override this shape by using the OCI Load Balancer Annotations. To keep using the dynamic shape but change the available total bandwidth to 400Mbps, use the following annotation on your LoadBalancer service:

service.beta.kubernetes.io/oci-load-balancer-shape: "400Mbps"

Configure flexible shape with bandwidth:

service.beta.kubernetes.io/oci-load-balancer-shape: "flexible"

service.beta.kubernetes.io/oci-load-balancer-shape-flex-min: "50"

service.beta.kubernetes.io/oci-load-balancer-shape-flex-max: "200"
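
To show where these annotations sit on a service, here is a minimal sketch that declares a LoadBalancer service from Terraform. It assumes the hashicorp/kubernetes provider is already configured against the cluster, which is not part of this module. The service name, label selector, and ports are hypothetical. Kubernetes annotation values are strings, so the bandwidth limits are quoted.

```hcl
# Sketch only: assumes the hashicorp/kubernetes provider is already configured
# against the cluster; names, labels, and ports are hypothetical.
resource "kubernetes_service_v1" "web" {
  metadata {
    name = "web"
    annotations = {
      "service.beta.kubernetes.io/oci-load-balancer-shape"          = "flexible"
      "service.beta.kubernetes.io/oci-load-balancer-shape-flex-min" = "50"
      "service.beta.kubernetes.io/oci-load-balancer-shape-flex-max" = "200"
    }
  }

  spec {
    type = "LoadBalancer"
    selector = {
      app = "web"
    }
    port {
      port        = 80
      target_port = 8080
    }
  }
}
```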

Bastion

The bastion instance provides a public SSH entrypoint into the VCN, from which resources in private subnets may be accessed. Using a bastion is recommended to limit public IP usage and exposure.

The bastion host parameters concern:

- whether you want to enable the bastion

- from where you can access the bastion

- the different parameters about the bastion host e.g. shape, image id etc.

Image

The OS image for the created bastion instance.

Recommended: Oracle Autonomous Linux 8.x

Example usage

create_bastion = true # *true/false

bastion_allowed_cidrs = [] # e.g. ["0.0.0.0/0"] to allow traffic from all sources

bastion_availability_domain = null # Defaults to first available

bastion_image_id = null # Ignored when bastion_image_type = "platform"

bastion_image_os = "Oracle Linux" # Ignored when bastion_image_type = "custom"

bastion_image_os_version = "8" # Ignored when bastion_image_type = "custom"

bastion_image_type = "platform" # platform/custom

bastion_nsg_ids = [] # Combined with created NSG when enabled in var.nsgs

bastion_public_ip = null # Ignored when create_bastion = true

bastion_type = "public" # *public/private

bastion_upgrade = false # true/*false

bastion_user = "opc"

bastion_shape = {

shape = "VM.Standard.E4.Flex",

ocpus = 1,

memory = 4,

boot_volume_size = 50

baseline_ocpu_utilization = "100" # accepted values: 100/50/12.5. Supported only with burstable shapes.

}
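
As a variation on the example above, the following sketch restricts SSH access to a single source range and boots the bastion from a custom image. The CIDR is taken from a documentation-only address range and the image OCID is a placeholder; both are assumptions to replace with your own values.

```hcl
# Sketch only: the CIDR and image OCID are placeholders, not real values.
create_bastion        = true
bastion_allowed_cidrs = ["203.0.113.0/24"] # documentation range; use your own source CIDR

bastion_image_type = "custom"
bastion_image_id   = "ocid1.image.oc1..exampleuniqueid" # used because the type is "custom"
# bastion_image_os and bastion_image_os_version are ignored for custom images.
```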

Bastion: SSH

Command usage for ssh through the created bastion to the operator host is included in the module's output:

$ terraform output

cluster = {

"bastion_public_ip" = "138.0.0.1"

"ssh_to_operator" = "ssh -J opc@138.0.0.1 opc@10.0.0.16"

...

}

$ ssh -J opc@138.0.0.1 opc@10.0.0.16 kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.1.48.175 Ready node 7d10h v1.25.6

10.1.50.102 Ready node 3h12m v1.25.6

10.1.52.76 Ready node 7d10h v1.25.6

10.1.54.237 Ready node 5h41m v1.25.6

10.1.58.74 Ready node 5h22m v1.25.4

10.1.62.90 Ready node 3h12m v1.25.6

$ ssh -J opc@138.0.0.1 opc@10.1.54.237 systemctl status kubelet

● kubelet.service - Kubernetes Kubelet

Active: active (running) since Tue 2023-03-28 01:48:08 UTC; 5h 48min ago

...
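
When the module is called from your own root configuration, an output like the one shown above can be surfaced with a block along these lines. This is a sketch that assumes the module is instantiated as module "oke" and that it exposes outputs named bastion_public_ip and ssh_to_operator; those names are inferred from the keys in the sample output and should be checked against the module's outputs.

```hcl
# Sketch only: assumes a module instance named "oke" and that it exposes
# outputs named bastion_public_ip and ssh_to_operator (inferred from the
# keys in the sample output; verify against the module's outputs).
output "cluster" {
  value = {
    bastion_public_ip = module.oke.bastion_public_ip
    ssh_to_operator   = module.oke.ssh_to_operator
  }
}
```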

Operator

The operator instance provides an optional environment within the VCN from which the OKE cluster can be managed.

The operator host parameters concern:

- whether you want to enable the operator

- from where you can access the operator

- the different parameters about the operator host e.g. shape, image id etc.

Example usage

create_operator = true # *true/false

operator_availability_domain = null

operator_cloud_init = []

operator_image_id = null # Ignored when operator_image_type = "platform"

operator_image_os = "Oracle Linux" # Ignored when operator_image_type = "custom"

operator_image_os_version = "8" # Ignored when operator_image_type = "custom"

operator_image_type = "platform"

operator_nsg_ids = []

operator_private_ip = null

operator_pv_transit_encryption = false # true/*false

operator_upgrade = false # true/*false

operator_user = "opc"

operator_volume_kms_key_id = null

operator_shape = {

shape = "VM.Standard.E4.Flex",

ocpus = 1,

memory = 4,

boot_volume_size = 50

baseline_ocpu_utilization = "100" # accepted values: 100/50/12.5. Supported only with burstable shapes.

}

Operator: Cloud-Init

Custom actions may be configured on instance startup in a number of ways depending on the use-case and preferences.

Cloud init configuration applied to the operator host:

operator_cloud_init = [

{

content = <<-EOT

runcmd:

- echo "Operator cloud_init using cloud-config"

EOT

content_type = "text/cloud-config",

},

{

content = "/path/to/file"

content_type = "text/cloud-boothook",

},

{

content = "<Base64-encoded content>"

content_type = "text/x-shellscript",

},

]
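
One way to produce the Base64-encoded form is Terraform's built-in base64encode function. The sketch below assumes the module is called from a root configuration as module "oke" using the registry source shown, and the script body is a placeholder. Function calls are not allowed in .tfvars files, so this form applies only inside a module block.

```hcl
# Sketch only: the script body is a placeholder and the module source
# address is an assumption; other required inputs are omitted.
locals {
  operator_setup_script = <<-EOT
    #!/bin/bash
    echo "Operator cloud_init using a shell script"
  EOT
}

module "oke" {
  source = "oracle-terraform-modules/oke/oci"

  create_operator = true
  operator_cloud_init = [
    {
      content      = base64encode(local.operator_setup_script)
      content_type = "text/x-shellscript"
    },
  ]
}
```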

Operator: Identity

instance_principal

Instance_principal is an IAM service feature that enables instances to be authorized actors (or principals) to perform actions on service resources. Each compute instance has its own identity, and it authenticates using the certificates that are added to it. These certificates are automatically created, assigned to instances and rotated, preventing the need for you to distribute credentials to your hosts and rotate them.

Dynamic Groups group OCI instances as principal actors, similar to user groups. IAM policies can then be created to allow instances in these groups to make calls against OCI infrastructure services. For example, on the operator host, this permits kubectl to access the OKE cluster.

Any user who can access the instance (for example, anyone who can SSH to it) automatically inherits the privileges granted to the instance. Before you enable this feature, ensure that you know who can access the instance, and that they should be authorized with the permissions you are granting to it.
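
To make the dynamic group and policy relationship concrete, here is a minimal sketch using the OCI Terraform provider directly. All names and input variables are hypothetical, and the module may already create equivalent resources for you; this is only meant to illustrate the two pieces described above.

```hcl
# Sketch only: all names and variables below are hypothetical, and the module
# may already create equivalent resources for you.
variable "tenancy_id" { type = string }
variable "compartment_id" { type = string }
variable "operator_instance_id" { type = string }

# Dynamic groups are created in the tenancy (root compartment).
resource "oci_identity_dynamic_group" "operator" {
  compartment_id = var.tenancy_id
  name           = "example-operator-dg"
  description    = "Operator instance acting as a principal"
  matching_rule  = "ALL {instance.id = '${var.operator_instance_id}'}"
}

# Policy letting members of the dynamic group manage OKE clusters.
resource "oci_identity_policy" "operator" {
  compartment_id = var.compartment_id
  name           = "example-operator-policy"
  description    = "Allow the operator instance to manage OKE clusters"
  statements = [
    "Allow dynamic-group ${oci_identity_dynamic_group.operator.name} to manage cluster-family in compartment id ${var.compartment_id}",
  ]
}
```

Because the matching rule names a single instance, only that host gains the permissions; a broader rule would extend them to every matching instance and to anyone who can log in to those instances.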